
Pioneering AI research by exploring underinvestigated strategies to secure humanity’s future.

Progress in AI is accelerating. Alignment research isn’t keeping up. Most solutions focus on narrow paths. We believe the space of possibilities is larger and largely unexplored.

AE Studio is the team trusted by top founders and Fortune 10 executives to win the future, faster.

We investigate strategies at the edges of mainstream AI safety thinking.

Study how humans naturally cooperate. Apply it to design more aligned AI behaviors.

Leverage brain-computer interfaces to better model decision-making and agency.

Build bipartisan consensus to create durable AI safety policies.

Protect insiders who raise concerns. Surface critical information early.

Channel capital toward underexplored, high-leverage research directions.

Investigate how awareness and self-modeling might guide safer AI.

Built different by design.


We've been focused on this for a decade with no external distractions.

Modern society is too focused on near-term gains rather than long-term consequences. We've invested time and money into something we believe is crucial to our species in the long run.

We described our alignment research agenda, focusing on neglected approaches, which received significant positive feedback from the community and has updated the broader alignment ecosystem towards embracing the notion of neglected approaches. Notably, some of the neglected approaches we propose could have a negative alignment tax, a concept we elaborate on in our LessWrong post "The case for a negative alignment tax" that challenges traditional assumptions about the relationship between AI capabilities and alignment.

We also discussed our approach to alignment, AI x-risks, and many other topics in a couple of podcasts:

Biologically Inspired AI Alignment: Exploring Neglected Approaches with AE Studio's Judd and Mike

Is Artificial Intelligence a Threat to Humanity? Judd Rosenblatt Discusses AI Safety and Alignment @ Superhuman AI

We published a LessWrong post explaining the concept of self-other overlap, a method that aligns an AI’s representations of itself and others and is inspired by the mechanisms that foster human prosociality. The post also shows our initial results with this methodology on a reinforcement learning model. We posted the highlights in this Twitter thread.

“Not obviously stupid on a very quick skim… I rarely give any review this positive… Congrats.” - Eliezer Yudkowsky
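To make the idea concrete, here is a minimal sketch of what an overlap term could look like. It is an illustration under our own simplifying assumptions, not the code from the post: the function names, the use of mean squared distance, and the `overlap_weight` parameter are all hypothetical. It treats a model's internal activations on a self-referencing input and on an other-referencing input as plain lists of numbers and penalizes the distance between them.

```python
# Hypothetical sketch: a self-other overlap penalty on two activation vectors.
# The distance measure and the weighting are assumptions for illustration only.

def self_other_distance(self_activations, other_activations):
    """Mean squared distance between activations on self- and other-referencing inputs."""
    if len(self_activations) != len(other_activations):
        raise ValueError("activation vectors must have the same length")
    squared = [(s - o) ** 2 for s, o in zip(self_activations, other_activations)]
    return sum(squared) / len(squared)


def loss_with_overlap(task_loss, self_activations, other_activations, overlap_weight=1.0):
    """Add the overlap penalty to an existing task loss (the weight is an assumed knob)."""
    penalty = self_other_distance(self_activations, other_activations)
    return task_loss + overlap_weight * penalty


# Example: identical representations add no penalty, differing ones do.
print(loss_with_overlap(0.5, [1.0, 2.0], [1.0, 2.0]))
print(loss_with_overlap(0.5, [1.0, 2.0], [1.0, 4.0]))
```

Minimizing a term like this pushes the two sets of activations closer together, which is the sense in which the representations "overlap".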

We hosted a panel discussion at SXSW about the path to Conscious AI, highlighting the importance of AI consciousness research, and discussed it in a LessWrong post. We have also hosted two earlier SXSW panels on BCI. We’re now regularly collaborating with the top thinkers in this space (if this sounds like you, we encourage you to reach out to us).

Our paper on LLM reason-based deception was presented at the ICLR AGI workshop and can be found on arXiv.

We've created accessible content on important alignment concepts, including a DIY implementation of RLHF and a video on Guaranteed Safe AI.

We published a paper on the "Unexpected Benefits of Self-Modeling in Neural Systems", in which neural networks learn to predict their own internal states as an auxiliary task, which changes them in a fundamental way. This work was presented at the Science of Consciousness 2024 and Mila’s NeuroAI Seminar. The Twitter thread we released with the paper drew a lot of interesting discussion about the implications of this work, along with general public excitement.
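As a rough illustration of what "predicting internal states as an auxiliary task" can mean, the sketch below combines a task loss with a second term that scores how well a network's predicted activations match its actual hidden activations. This is not the paper's implementation: the function names, the mean-squared-error choice, and the `aux_weight` parameter are assumptions made for the example.

```python
# Hypothetical sketch: a task loss plus a self-modeling auxiliary loss.
# MSE and the auxiliary weight are illustrative assumptions, not the paper's setup.

def mean_squared_error(predicted, target):
    """Average squared difference between two equal-length lists of numbers."""
    if len(predicted) != len(target):
        raise ValueError("predicted and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)


def self_modeling_loss(task_loss, hidden_activations, predicted_activations, aux_weight=0.1):
    """Total loss = task loss + aux_weight * error in predicting the network's own activations."""
    aux_loss = mean_squared_error(predicted_activations, hidden_activations)
    return task_loss + aux_weight * aux_loss


# Example: a perfect self-prediction leaves the task loss unchanged.
print(self_modeling_loss(1.0, [0.3, -0.7], [0.3, -0.7]))
```

Because the auxiliary term depends on the hidden activations themselves, training against it can shape those activations as well as the prediction head, which is one way to read the claim that self-modeling changes the network.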

We ran a comprehensive survey of over 100 alignment researchers and 250+ EA community members. This survey provided valuable insights into the current psychological and epistemological priors of the alignment community. Notably, we found that alignment researchers don’t believe we are on track to solve alignment and, relatedly, that current research doesn't adequately cover the space of plausible approaches to alignment. Both findings reinforce our view that pursuing neglected approaches is important. Along with the results, we released an interactive data analysis tool so everyone can explore the data independently.

We published a thought piece making the case for more startups in the alignment ecosystem, arguing that the incentives and structures of startups can be particularly effective for alignment work, as well as driving new funding and talent into the space. We’ve also made some alignment-focused investments, which go into AE's equity plan for team members with the new goal of getting everyone diversified exposure to an exponentially larger post-human economy, while simultaneously advancing AI safety.

Earlier on, we published a paper on arXiv titled "Ignore Previous Prompt: Attack Techniques For Language Models", which won the best paper award at the 2022 NeurIPS ML Safety Workshop.

We've sponsored various events focused on AI safety and whole brain emulation, including Foresight Institute events and a Brainmind event. We've also funded Professor Michael Graziano's continued research on attention schema theory and Joel Saarinen's value learning research.

Our original theory of change involved enhancing human cognitive capabilities to address challenges like AI alignment. While we're now exploring multiple approaches to AI safety, we continue to see potential in BCI technology. If AI-driven scientific automation progresses safely, we anticipate increased investment in BCI research. We're also advocating for government funding to be directed towards this approach, as it represents an opportunity to augment human intelligence alongside AI development.

While our emphasis has shifted towards AI alignment, our work in Brain-Computer Interfaces (BCI) remains an important part of our mission to enhance human agency:

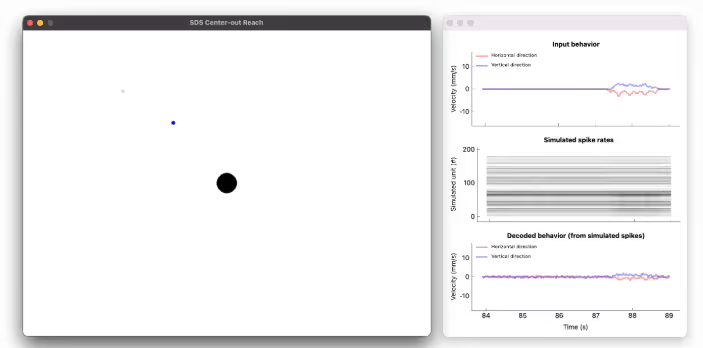

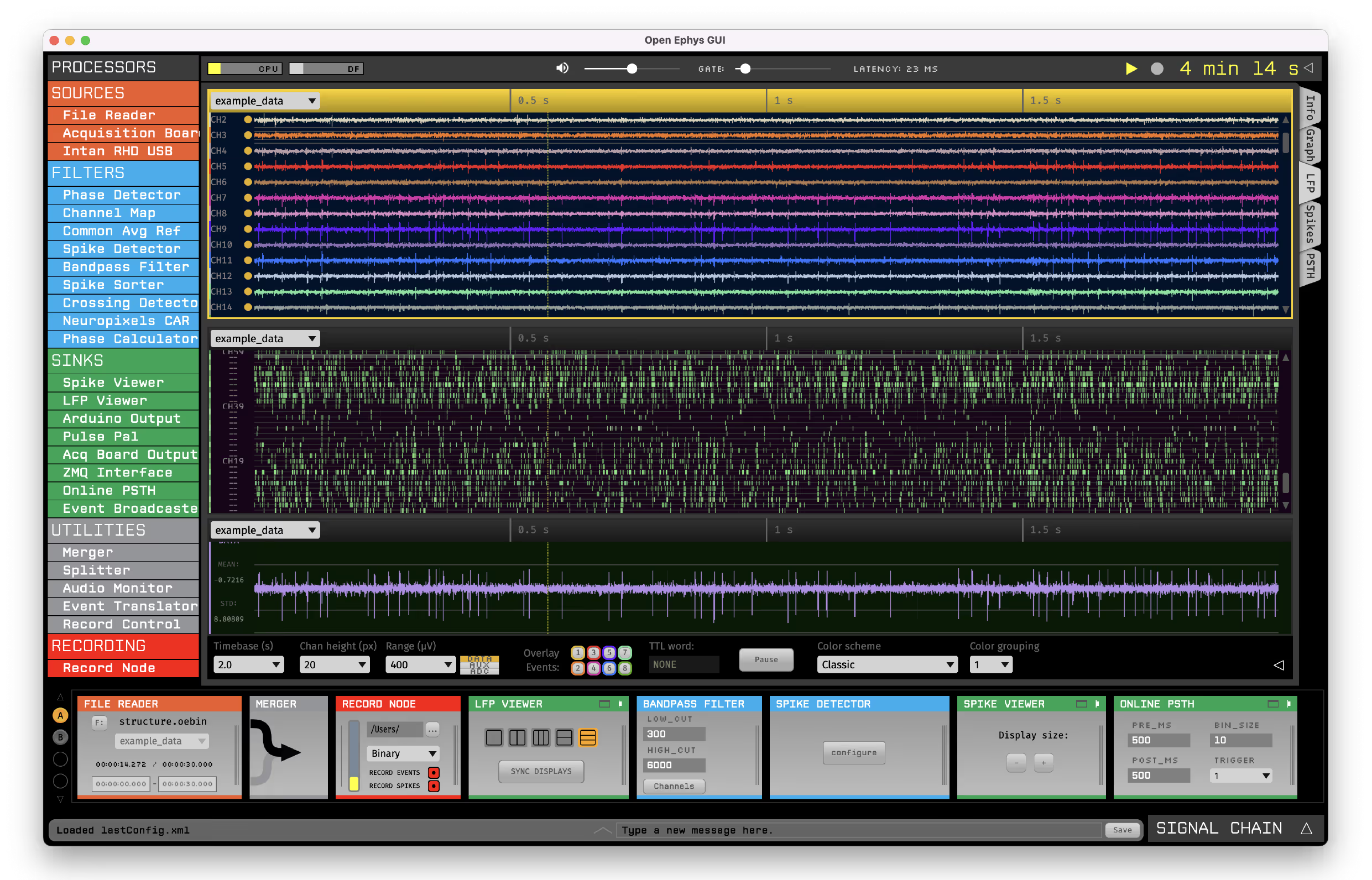

We've developed and open-sourced several tools to propel and democratize BCI development, like the Neural Data Simulator, which facilitates the development of closed-loop BCIs, and the Neurotech Development Kit, which models transcranial brain stimulation technologies. These tools have contributed to lowering barriers in BCI research and development.

We won first place in this challenge to develop the best ML models for predicting neural data, topping the best research labs in the space.

We led the development of widely accepted neuro metadata standards and tools, supporting open-source neuro-analysis software projects like MNE, OpenEphys, and Lab Streaming Layer.

We've joined forces with leading BCI companies like Forest Neurotech and Blackrock Neurotech, helping to bridge the gap between academic research and industry applications.

We've developed secure methods for analyzing neural data and training privacy-preserving machine learning models, addressing crucial ethical considerations in BCI development.


At AE Studio, we tackle ambitious, high-impact challenges using neglected approaches.

Starting with Brain-Computer Interfaces (BCI), we bootstrapped a consulting business, launched startups, and reinvested in frontier research, leading to collaborations with Forest Neurotech and Blackrock Neurotech.

Today, we’re 160 strong: engineers, designers, and data scientists focused on increasing human agency.

Now, we’re applying our proven model to AI alignment, accelerating safety startups like Goodfire AI and NotADoctor.ai to tackle existential risks.

Our data scientists, from places like Stanford, Caltech, and MIT, are highly collaborative, efficient, and pragmatic.

Humanity is too focused on capitalism in a competitive landscape. We've invested time and money into something we believe is crucial to our species in the long run.



Learn more about all the members of our team and why we do what we do.
